What to do after you get promoted into a leadership position should be a trivial question to answer, but in my experience the opposite is true. In fact sometimes it seems to me that leadership is some kind of taboo topic in the games industry. Making games is supposed to be creative and fun and people would rather not talk about a ‘boring’ topic like leadership, but everyone who has had a bad supervisor at some point will agree that a lack of leadership skills can be incredibly harmful to team morale and therefore to the game development process. That’s why, when I was first promoted into a leadership position, I set myself the goal of being just like the awesome supervisors I had in the past. But what made these people great bosses? I had no idea, but I assumed I would figure it out myself along the way. Looking back at it now, I have to admit I was quite naïve.

After learning more about the theory and practice of leadership I realized that I was unprepared for this role and I’m not the only one with this experience. Before I started writing this article I talked to several leads (or ex-leads) and none of them had ever received any kind of leadership training. Some people were lucky enough to have a mentor, but even that doesn’t seem to be the standard. To me the most troubling fact is that none of the leads were ever told what was expected of them in their new role.

Given how important this role is you would think that game studios would invest some time and money to train their leads, but that doesn’t seem to be the case. The optimistic interpretation is that the companies trust their employees enough to quickly pick up the required skills themselves. The pessimistic interpretation on the other hand is that management simply doesn’t care or know any better. The real reason is probably located somewhere in between these extremes, but it doesn’t change the fact that most new leaders are simply thrown in at the deep end.

When I was first promoted into a leadership role I really had no clue what I was doing or what I was supposed to do. I was a good programmer and a responsible team player (which is why I was promoted I guess) and I figured I should simply continue coding until some kind of big revelation would turn me into an awesome team lead. Obviously I never had this magical epiphany and after a while I realized I should probably start investigating leadership in a more methodical way.

My goal for this article is to share some of the lessons I learned myself while adjusting to my role as a lead programmer. If you were recently promoted into a leadership position hopefully you’ll find some of the content in this post helpful. If you had different experiences or have additional advice you’d like to share, then please leave a comment or contact me directly.

I want to emphasize the fact that leadership isn’t magic nor do you have to be born for it. Leadership is simply a set of skills that can be learned and in my experience it’s worth the time investment!

What is leadership anyway?

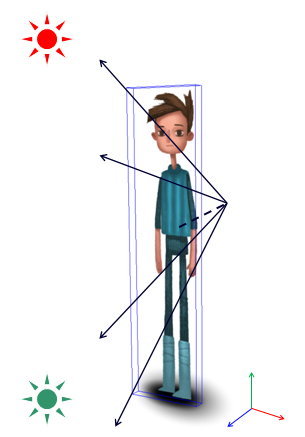

At the heart of a leadership position are people skills which make this role different from a regular production job. Being a great programmer, designer or artist doesn’t necessarily mean you are also an awesome team lead. In fact your production skills are merely the foundation on which you’ll have to build your leadership role.

But what exactly are these necessary people skills and what makes an effective team lead? Depending on who you talk to you’ll get different answers, but I think that the core values of leadership are about developing trust, setting directions and supporting the team in order to make the best possible product (e.g. game, tool, engine) with the given resources and constraints.

In order to be an effective lead you’ll first have to earn your colleagues’ trust. If your team feels like they can’t come to you with questions, problems or suggestions, then you (and the company) have a big problem. Gaining the trust of your team doesn’t happen automatically and requires a lot of effort. You can find some practical advice on how to work on this in the ‘leadership starter kit’ below.

Similarly if your supervisor (e.g. project lead) doesn’t trust you, then he or she will probably manage around you which is a bad situation for everyone involved. In my experience transparency is crucial when managing up especially when things don’t go as planned. Let your supervisor know if there is a problem and take responsibility by working on a solution.

Making games is complicated and it would be unrealistic to assume that there won’t be problems along the way. Dealing with difficult situations is much easier if everyone on your team is on the same page about what has to get done. Setting a clear direction for your team is therefore a crucial part of your role.

A great mission statement is concise so that it’s easy to remember and explain. For an environment art team this could be “We want to create a photorealistic setting for our game” whereas a tools lead might come up with “Every change to the level should be visible right away”. Of course it is important that your team’s direction is aligned with the vision of the project, because creating a photorealistic environment for a game with a painterly art style doesn’t make sense.

In addition to defining a clear direction for your team one of your main responsibilities as a lead is to provide support for your team, so that they can be successful. This might seem very obvious, but the shift from being accountable only for your own work to being responsible for the success of a group of people can be a hard lesson to learn in the beginning.

Almost all leads I talked to mentioned that they were surprised by how little time they had for their ‘actual job’ after being promoted. It is important to realize that the support of your team is your actual job now, which means that you’ll have to balance your workload differently. Some practical advice for this specific issue can be found below in the ‘leadership starter kit’.

Support can be provided in many different ways: Discussing the advantages and disadvantages of a proposed solution to a problem is one example. Being a mentor and helping the individual team members with their career progression is another form of support. A third example is to make sure that the team has everything it needs (e.g. dev-kits, access to documentation, tools …) to achieve the goals.

As a lead you might also have to support your team by letting someone know that his or her work doesn’t meet your expectations. A conversation like this isn’t easy, but it is important to let the person know that there is a problem and to offer advice and assistance to resolve the situation.

What leadership isn’t

In order to avoid misconceptions and common mistakes it can be quite useful to define what leadership (in the games industry) is not. This topic is somewhat shrouded in mystery and there are many incorrect or outdated assumptions.

For example I thought for the longest time that leadership and management are the same thing. This is not the case though and when I talked to other leads about what they dislike about their role I found that most aspects mentioned were in fact related to management rather than to leadership. Of course it would be unrealistic to assume that you will be able to avoid management tasks altogether, but getting help from a producer can reduce the amount of administrative work significantly.

Another misconception that is often popularized by movies is that you have to demonstrate your power as a leader by barking out orders all day. This might work well in the army, but making video games requires collaboration and creativity, and an authoritarian leadership style has no place in this environment. An inspired team is a productive team and autonomy is crucial for high morale.

Equally bad is to ignore the team by using a hands-off leadership approach. This mistake is quite common since most team leads started their career with a production job. It can be tough for a new lead to accept the changed responsibilities, but in my opinion this is one of the most important lessons to learn. Rather than contributing to the production directly your primary responsibility as a lead is to support your team. Having time for design, art or programming in addition to that is great, but the team should always come first.

As a lead you are responsible for your team, which means that you’ll also have to deal with complications. It’s inevitable that things will go wrong during the production of a game. Your team might introduce a new crash bug or maybe you run into an unexpected problem that causes the milestone to slip. Whatever the issue may be, as a lead you are responsible for what your team does, and playing the blame game is the worst thing you can do because it’ll ruin trust and team morale. Instead of shifting your responsibility to a team member you should concentrate on figuring out how to solve the problem.

Learning leadership skills

Now that we have a better understanding of what leadership is (and isn’t) it’s time to look at different ways of developing leadership skills. Despite the claims of some books or websites there is no easy 5-step program that will make you the best team lead in 30 days. As with most soft skills it is important to identify what works for you and then to improve your strategies over time. Thankfully there are different ways to find your (unique) leadership style.

The best way to develop your skills is by learning them directly from a mentor that you respect for his or her leadership abilities. This person doesn’t necessarily have to be your supervisor, but ideally it should be someone in the studio where you work. Leadership depends on the organizational structure of a company and it is therefore much harder for someone from the outside to offer practical advice.

Make sure to meet on a regular basis (at least once a month) in order to discuss your progress. A great mentor will be able to suggest different strategies to experiment with and can help you figure out what does and doesn’t work. These meetings also give you the opportunity to learn from his or her career by asking questions like these:

- How would you approach this situation?

- What is leadership?

- Which leader do you look up to and why?

- How did you learn your leadership skills?

- What challenges did you face and how did you overcome them?

But even if you aren’t fortunate enough to have access to a mentor you can (and should) still learn from other game developers by observing how they interact with people and how they approach and overcome challenges. The trick is to identify and assimilate effective leadership strategies from colleagues in your company or from developers in other studios.

While mentoring is certainly the most effective way to develop your leadership skills you can also learn a lot by reading books, articles and blog posts about the topic. It’s difficult to find good material that is tailored to the games industry, but thankfully most of the general advice also applies in the context of games. The following two books helped me to learn more about leadership:

- “Team Leadership in the Games Industry” by Seth Spaulding takes a closer look at the typical responsibilities of a team lead. The book also covers topics like the different organizational structures of games studios and how to deal with difficult situations.

- “How to Lead” by Jo Owen explores what leadership is and why it’s hard to come up with a simple definition. Even though the book is aimed at leads in the business world it contains a lot of practical tips that apply to the games industry as well.

Talks and round-table discussions are another great way to learn from experienced leaders. If you are fortunate enough to visit GDC (or other conferences) keep your eyes open for sessions about leadership. It’s a great way to connect with fellow game developers and has the advantage that you can get advice on how to overcome some of the challenges you might be facing at the moment.

But even if you can’t make it to conferences there are quite a few recorded presentations available online. I highly recommend the following two talks:

- “Concrete Practices to be a Better Leader” by Brian Sharp is a fantastic presentation about various ways to improve your leadership skills. This talk is very inspirational and contains lots of helpful techniques that can be used right away.

- “You’re Responsible” by Mike Acton is essentially a gigantic round-table discussion about the responsibilities of a team lead. As usual Mike does a great job offering practical advice along the way.

Lastly there are a lot of talks about leadership outside of the games industry available on the internet (just search for ‘leadership’ on YouTube). Personally I find some of these presentations quite interesting since they help me to develop a broader understanding of leadership by offering different ways to look at the role. For example the TED playlist “How leaders inspire” discusses leadership styles in the context of the business world, military, college sports and even symphonic orchestras. In typical TED fashion the talks don’t contain a lot of practical advice, but they are interesting nonetheless.

Leadership starter kit

So you’ve just been promoted (or hired) and the title of your new role now contains the word ‘lead’. First of all, congratulations and well done! This is an exciting step in your career, but it’s important to realize that your day to day job will be quite different from what it used to be and that you’ll have to learn a lot of new skills.

I would like to help you get started in your new role by offering some specific and practical advice that I found useful during this transitional period. My hope is that this ‘starter kit’ will get you going while you investigate additional ways to develop your leadership skills (see section above). The remainder of the section will therefore cover the following topics:

- One-on-one meetings

- Delegation

- Responsibility

- Mike Acton’s quick start guide

As a lead your main responsibility is to support your team, so that they can achieve the current set of goals. For that it’s crucial that you get to know the members of your team quite well, which means you should have answers to questions like these:

- What is she good at?

- What is he struggling with?

- Where does she want to be in a year?

- Is he invested in the project or would he prefer to work on something else?

- Are there people in the company she doesn’t want to work with?

- Does he feel properly informed about what is going on with the project / company?

You might not get sincere replies to these questions unless people are comfortable enough with you to trust you with honest answers. Sincere feedback is absolutely critical for the success of your team, though, especially in difficult times. I would therefore argue that developing mutual trust between you and your team should be your main priority.

Building trust takes a lot of time and effort and an essential part of this process is to have a private chat with each member of your team on a regular basis (at least once a month). These one-on-one meetings can take place in a meeting room or even a nearby coffee shop. The important thing is that both of you feel comfortable having an open and honest conversation, so make sure to pick the location accordingly.

These meetings don’t necessarily have to be long. If there is nothing to talk about then you might be done after 10 minutes. At other times it may take an hour (or more) to discuss a difficult situation. Make sure to avoid possible distractions (e.g. mobile phone) during these meetings, so you can give the other person your full attention.

One-on-one meetings raise morale because the team will realize that they can rely on you to keep them in the loop and to represent their concerns and interests. Personally I find that these conversations help me to do my job better since I’m much more likely to hear about a (potential) problem when the team feels comfortable telling me about it.

At this point you might be concerned that these meetings take time away from your ‘actual job’, but that’s not true because they are your job now. Whether you like it or not you’ll probably spend more time in meetings and less time contributing directly to the current project. Depending on the size of your company it’s safe to assume that leadership and management will take up between 20% and 50% of your time. This means that you won’t be able to take on the same amount of production tasks as before and you’ll therefore have to learn how to delegate work. I know from personal experience that this can be a tough lesson to learn in the beginning.

In addition to balancing your own workload delegation is also about helping your team to develop new skills and to improve existing ones. Just because you can complete a task more efficiently than any other person on your team doesn’t necessarily mean that you are the best choice for this particular task. Try to take the professional interest of the individual members of your team into account as much as possible when assigning tasks, because people will be more motivated to work on something they are passionate about.

Beyond these practical considerations it is important to note that delegation also has an impact on the mutual trust between you and your team. By routinely taking on ‘tough’ tasks yourself you indicate that you don’t trust your teammates to do a good job, which will ruin morale very quickly. Keep in mind that your colleagues are trained professionals just like yourself, so treat them that way!

Experiencing your entire team working together and producing great results is very empowering and it is your job to make it happen even if nobody tells you this explicitly. In an ideal world it would be obvious what your company expects from you, but in reality that will probably not be the case. It is important to understand that while you have more influence over the direction of the project, your team and even the company, you also have more responsibilities now.

First and foremost you are responsible for the success (or failure) of your team and any problem preventing success should be fixed right away. This could be as simple as making sure that your team has the necessary hardware and software, but it could also involve negotiations with another department in order to resolve a conflict of interest.

One responsibility that is often overlooked by new leads is the professional development of the team. It is your job to make sure that the people on your team get the opportunities to improve their skillset. In order to do that you’ll first have to identify the short- and long-term career goals of each team member. In addition to delegating work with the right amount of challenge (as described above) it is also important to provide general career mentorship.

A video game is a complicated piece of software and making one isn’t easy. Mistakes happen and your team might cause a problem that affects another department or even the production schedule. This can be a difficult situation especially when other people are upset and emotions run high. I know it’s easier said than done, but don’t let the stress get the best of you. Rather than identifying and blaming a team member for the mistake you should accept the responsibility and figure out a way to fix the problem. You can still analyze what happened after the dust has settled, so that this issue can be prevented in the future.

It is very unfortunate that a lot of newly minted team leads have to identify additional responsibilities themselves. Thankfully some companies are the exception to the rule. At Insomniac Games, for example, new leads have access to a ‘quick start guide’ that helps them to get adjusted to their new role. This helpful document is publicly available and was written by Mike Acton who has been doing an exceptional job educating the games industry about leadership. I highly recommend that you read the guide: https://web.archive.org/web/20140701034212/http://www.altdev.co/2013/11/05/gamedev-lead-quick-start-guide/ (Original post: http://www.altdev.co/2013/11/05/gamedev-lead-quick-start-guide/)

Leadership is hard (but not impossible)

Truth be told, becoming a great team lead isn’t easy. In fact it might be one of the toughest challenges you’ll have to face in your career. The good news is that you are obviously interested in leadership (why else would you have read all this stuff?) and want to learn more about how to become a good lead. In other words you are doing great so far!

I hope you found this article helpful and that it’ll make your transition into your new role a bit easier.

Good luck and thank you for reading!

PS: Whether you just got promoted or have been leading a team for a long time I would love to hear from you, so please feel free to leave a comment.

PPS: I would like to thank everybody who helped me with this article. You guys rock!